Airflow 3 and Airflow AI SDK in Action - Analyzing League of Legends

Learn Key Airflow 3 and AI Features Through a Practical League of Legends Data Project

Do I have enough data to use AI?

Imagine sitting in a meeting room, surrounded by your team and walls of dashboards. You can feel the tension in the air. Your team has spent months collecting terabytes of data, building pipelines, and fine-tuning infrastructure. The board meeting is tomorrow, and they’ll want to know why, despite millions invested in data systems, your AI initiatives haven’t delivered the promised results. This scenario plays out in thousands of companies right now, all asking the same question that Yaakov Bressler, Lead Data Engineer at Capital One, recently brought into sharp focus.

Source: LinkedIn

The numbers tell a haunting story: According to Gartner’s latest survey, less than half of AI projects ever make it into production. In meeting rooms across the globe, the same scene repeats with tragic predictability. Companies accumulate more data, build larger data lakes, and implement more sophisticated pipelines. Yet month after month, their AI initiatives remain stalled. The more they struggle to make AI work by gathering more data, the deeper they sink into this technical quicksand.

The truth? Most organizations already have sufficient data. What they lack is far rarer: human expertise. According to the IBM Global AI Adoption Index 2023, the shortage of AI skills has emerged as the primary barrier to AI adoption, significantly outweighing data concerns.

Let’s learn why the real challenge isn’t the volume of data, but the expertise needed to transform it into value. More importantly, let’s reveal how some organizations are finding their way out of this trap, and why the solution might be simpler than you think.

Many businesses operate under the assumption that massive amounts of data are essential for successful AI projects. The belief that more data equals better AI is widespread, but often misleading. In many cases, the quality and relevance of data outweigh quantity.

There’s a movement known as Small Data that emphasizes the use of well-curated, relevant datasets over large volumes of data. This approach recognizes that:

To delve deeper into the principles of Small Data, I highly recommend exploring the Small Data manifesto at https://www.smalldatasf.com.

Small Data, source: https://www.smalldatasf.com

In the realm of medical image analysis, acquiring large labeled datasets is often challenging due to privacy concerns, the need for expert annotations, and the rarity of certain conditions. The paper Not-so-supervised: a survey of semi-supervised, multi-instance, and transfer learning in medical image analysis highlights this challenge and explores alternative learning strategies that thrive on smaller datasets, underlining that less is sometimes more:

The paper underscores how these techniques, combined with expert knowledge in data curation and annotation, enable effective AI models even with limited data. Their work demonstrates that focusing on highly relevant, carefully annotated examples can lead to better performance than simply amassing vast quantities of less pertinent data. This is particularly relevant in medical imaging, where the small data approach empowers AI to detect rare diseases, segment anatomical structures, and perform various diagnostic tasks with remarkable accuracy, even with limited labeled samples. This reinforces the idea that with strategic data selection and appropriate techniques, smaller can indeed be better.
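
To make "alternative learning strategies" more concrete, here is a minimal sketch of one semi-supervised technique, self-training (pseudo-labeling): a model trained on a few expert labels labels the unlabeled points it is confident about, then retrains on the enlarged set. It is not taken from the paper; the nearest-centroid classifier, the 1-D toy values, the `"healthy"`/`"lesion"` labels, and the `margin` threshold are all hypothetical choices for illustration.

```python
from statistics import mean


def centroids(labeled):
    """Average the values of each class: one centroid per label."""
    by_label = {}
    for value, label in labeled:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def self_train(labeled, unlabeled, margin=1.0, max_rounds=10):
    """Grow a small labeled set by pseudo-labeling confident unlabeled points.

    A point is "confident" when it is at least `margin` closer to its nearest
    centroid than to the second-nearest one. Uncertain points stay unlabeled.
    """
    labeled = list(labeled)
    pool = list(unlabeled)
    for _ in range(max_rounds):
        cents = centroids(labeled)
        confident = []
        for x in pool:
            dists = sorted((abs(x - c), label) for label, c in cents.items())
            if len(dists) > 1 and dists[1][0] - dists[0][0] >= margin:
                confident.append((x, dists[0][1]))
        if not confident:
            break  # nothing left that the model is sure about
        labeled.extend(confident)  # pseudo-labels join the training set
        accepted = {x for x, _ in confident}
        pool = [x for x in pool if x not in accepted]
    return labeled, pool


# Two expert-labeled examples plus a few unlabeled (hypothetical) measurements.
labeled, leftover = self_train(
    labeled=[(1.0, "healthy"), (9.0, "lesion")],
    unlabeled=[1.5, 2.0, 8.0, 8.5, 5.1],
)
print(labeled)
print(leftover)
```

Here the four clear-cut points receive pseudo-labels, while 5.1 stays unlabeled because it sits almost equally close to both classes. Leaving such ambiguous cases for an expert to annotate is exactly where the human knowledge mentioned above comes in.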

The gaming industry provides an interesting example of how small, carefully curated datasets can drive significant advancements in AI. Generating engaging and playable game levels is a complex task, traditionally requiring extensive manual design. However, recent research demonstrates how AI, trained on relatively small datasets, can automate this process effectively.

The paper Level Generation for Angry Birds with Sequential VAE and Latent Variable Evolution tackles the challenge of procedurally generating levels for the popular game Angry Birds. Generating levels for physics-based puzzle games like Angry Birds presents unique difficulties: the levels must be structurally stable, playable, and offer a balanced challenge. Slight errors in object placement can lead to unstable structures that the physics engine destroys, or to trivial solutions, either of which ruins the gameplay experience.

Angry Birds logo, source: Rovio press material

The authors used a sequential Variational Autoencoder (VAE) trained on a relatively small dataset of only 200 levels. Here’s how their small-data approach achieved good results:

Remarkably, their generator, trained on a dataset significantly smaller than those typically used for deep generative models, achieved a 96% success rate in generating stable levels. This demonstrates that by carefully choosing the right data representation and training techniques, even small datasets can empower AI to perform complex tasks in game development.

Levels generated by different generators, source: Level Generation for Angry Birds with Sequential VAE and Latent Variable Evolution

So again, the hurdle was not the amount of data but the human expertise needed to carefully choose and implement the right approach.

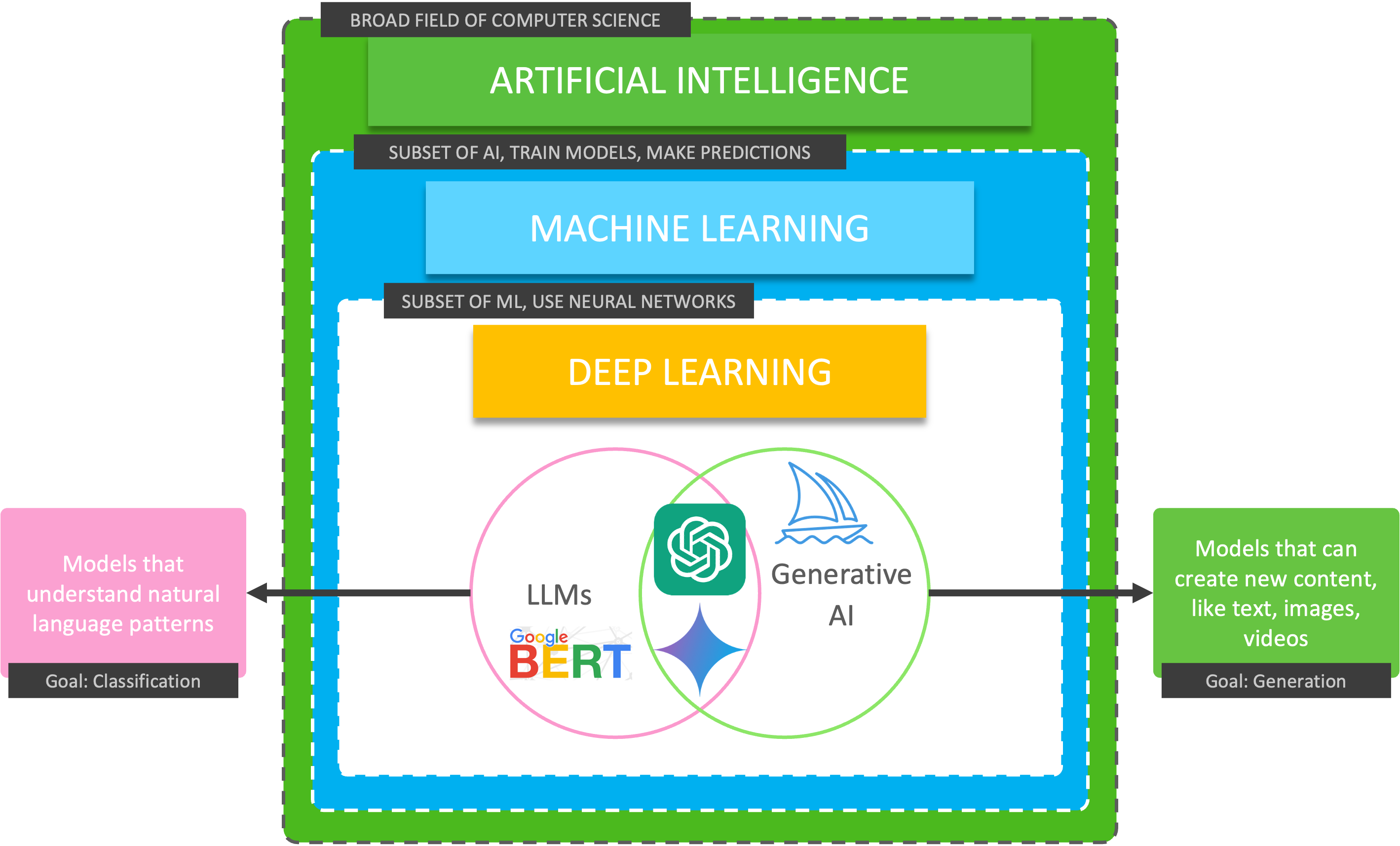

AI isn’t a monolithic field. It includes a wide range of topics—from traditional Machine Learning models like decision trees and random forests to advanced architectures such as Generative Adversarial Networks (GANs) and Large Language Models (LLMs).

AI is more than LLMs, source: by author

Selecting the right tool for a specific task requires more than just access to data; it demands the technical expertise to understand the nuances of different models and their applicability. The Angry Birds level generation example showcased this perfectly, where success was driven by a clever approach to data representation and model selection.

A crucial, often overlooked, aspect of AI solutions is data preparation. Machine Learning algorithms learn from the data they are fed. For effective learning, this data must be clean, complete, and appropriately formatted. Data preparation for AI involves collecting, cleaning, transforming, and organizing raw data into a format suitable for Machine Learning algorithms. This is not a one-time task but a continuous process. As models evolve or new data becomes available, data preparation steps often need revisiting and refinement.
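
As a minimal illustration of those steps, the sketch below cleans, transforms, and organizes a handful of raw records using only the Python standard library. The field names (`customer_id`, `spend`), the sample rows, and the specific rules (drop incomplete rows, deduplicate by ID, min-max scale a numeric column, sort by ID) are hypothetical choices for this example; real projects pick rules that fit their data and model.

```python
def prepare(raw_rows):
    """Turn raw records into clean, scaled, consistently ordered features."""
    # Cleaning: drop incomplete rows and strip stray whitespace.
    cleaned = []
    for row in raw_rows:
        if any(value in (None, "") for value in row.values()):
            continue
        cleaned.append({key: str(value).strip() for key, value in row.items()})

    # Cleaning: drop duplicate IDs, keeping the first occurrence.
    seen, unique = set(), []
    for row in cleaned:
        if row["customer_id"] not in seen:
            seen.add(row["customer_id"])
            unique.append(row)
    if not unique:
        return []

    # Transforming: cast to float and min-max scale spend into [0, 1].
    spend = [float(row["spend"]) for row in unique]
    low, high = min(spend), max(spend)
    span = (high - low) or 1.0  # avoid dividing by zero if all values match
    features = [
        {"customer_id": row["customer_id"], "spend_scaled": (s - low) / span}
        for row, s in zip(unique, spend)
    ]

    # Organizing: sort by ID so downstream steps see a stable order.
    return sorted(features, key=lambda r: r["customer_id"])


raw = [
    {"customer_id": "c2", "spend": " 150 "},
    {"customer_id": "c1", "spend": "50"},
    {"customer_id": "c1", "spend": "60"},  # duplicate ID
    {"customer_id": "c3", "spend": None},  # missing value
    {"customer_id": "c4", "spend": "250"},
]
print(prepare(raw))
```

With these sample rows, `c3` is dropped, the second `c1` is discarded, and the scaled spend values come out as 0.0 for `c1`, 0.5 for `c2`, and 1.0 for `c4`. Note that the scaling bounds depend on the data seen, which is one reason these steps need revisiting whenever new data arrives.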

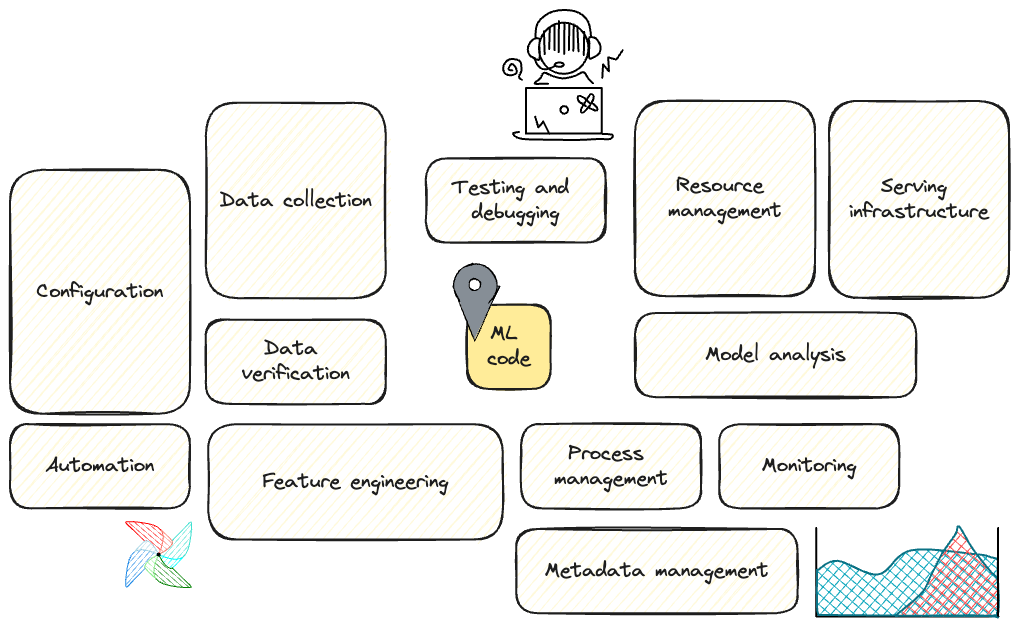

Furthermore, the entire AI pipeline, from data preparation and model training to prediction and monitoring, requires careful orchestration. As illustrated in the paper Hidden Technical Debt in Machine Learning Systems, the actual Machine Learning code represents only a small fraction of a real-world AI system.

By author, adapted from: Hidden Technical Debt in Machine Learning Systems

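
To illustrate what orchestration means at its core, the sketch below models the stages named above (data preparation, training, prediction, monitoring) as a small dependency graph and runs them in a valid order with the standard-library `graphlib.TopologicalSorter` (Python 3.9+). The stage names and their placeholder bodies are hypothetical; orchestrators such as Kubeflow Pipelines or Airflow add scheduling, retries, and isolated execution on top of this basic idea.

```python
from graphlib import TopologicalSorter


# Hypothetical stages; each reads the shared context and returns a result
# that is stored under the stage's own name.
def prepare_data(ctx):
    return [1.0, 2.0, 3.0, 4.0]


def train_model(ctx):
    data = ctx["prepare_data"]
    return {"mean": sum(data) / len(data)}  # stand-in for a real model


def predict(ctx):
    return ctx["train_model"]["mean"]


def monitor(ctx):
    # Sanity check: is the prediction inside the range seen during training?
    data = ctx["prepare_data"]
    return min(data) <= ctx["predict"] <= max(data)


STAGES = {f.__name__: f for f in (prepare_data, train_model, predict, monitor)}

# Each stage maps to the set of stages it depends on.
DEPENDENCIES = {
    "prepare_data": set(),
    "train_model": {"prepare_data"},
    "predict": {"train_model"},
    "monitor": {"prepare_data", "predict"},
}


def run_pipeline():
    """Execute every stage after all of its dependencies have finished."""
    ctx, order = {}, []
    for name in TopologicalSorter(DEPENDENCIES).static_order():
        ctx[name] = STAGES[name](ctx)
        order.append(name)
    return order, ctx


order, ctx = run_pipeline()
print(order)
print(ctx["monitor"])
```

Declaring dependencies instead of hard-coding the call order is the design choice that lets an orchestrator rerun only the affected stages when, for example, the data preparation step changes.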
This complexity underscores why technical expertise is essential throughout the AI lifecycle. Effective implementation involves several critical steps:

Without the necessary technical expertise, businesses risk deploying AI solutions that are not only ineffective but potentially harmful to their objectives.

The demand for AI expertise often outpaces the supply, creating a significant hurdle for businesses seeking to implement AI solutions. As Yaakov mentioned, human capital is the limitation. To address this gap, a variety of low-code and no-code AI platforms have emerged, offering varying degrees of simplification. Some provide intuitive drag-and-drop interfaces, while others still require some level of Python knowledge. To illustrate the spectrum of these platforms, let’s explore representative examples from three major cloud providers, Google Cloud Platform (GCP), Amazon Web Services (AWS), and Microsoft Azure, as well as some notable independent solutions.

Google Cloud Platform (GCP) offers a comprehensive suite of AI/ML tools under the Vertex AI umbrella, for a wide range of use cases. Vertex AI itself is a unified platform for building, deploying, and managing Machine Learning models. It encompasses various services, including:

One advantage of Vertex AI Pipelines over comparable offerings from other cloud providers is that it embraces open-source technologies, supporting pipeline formats from Kubeflow and TensorFlow Extended (TFX).

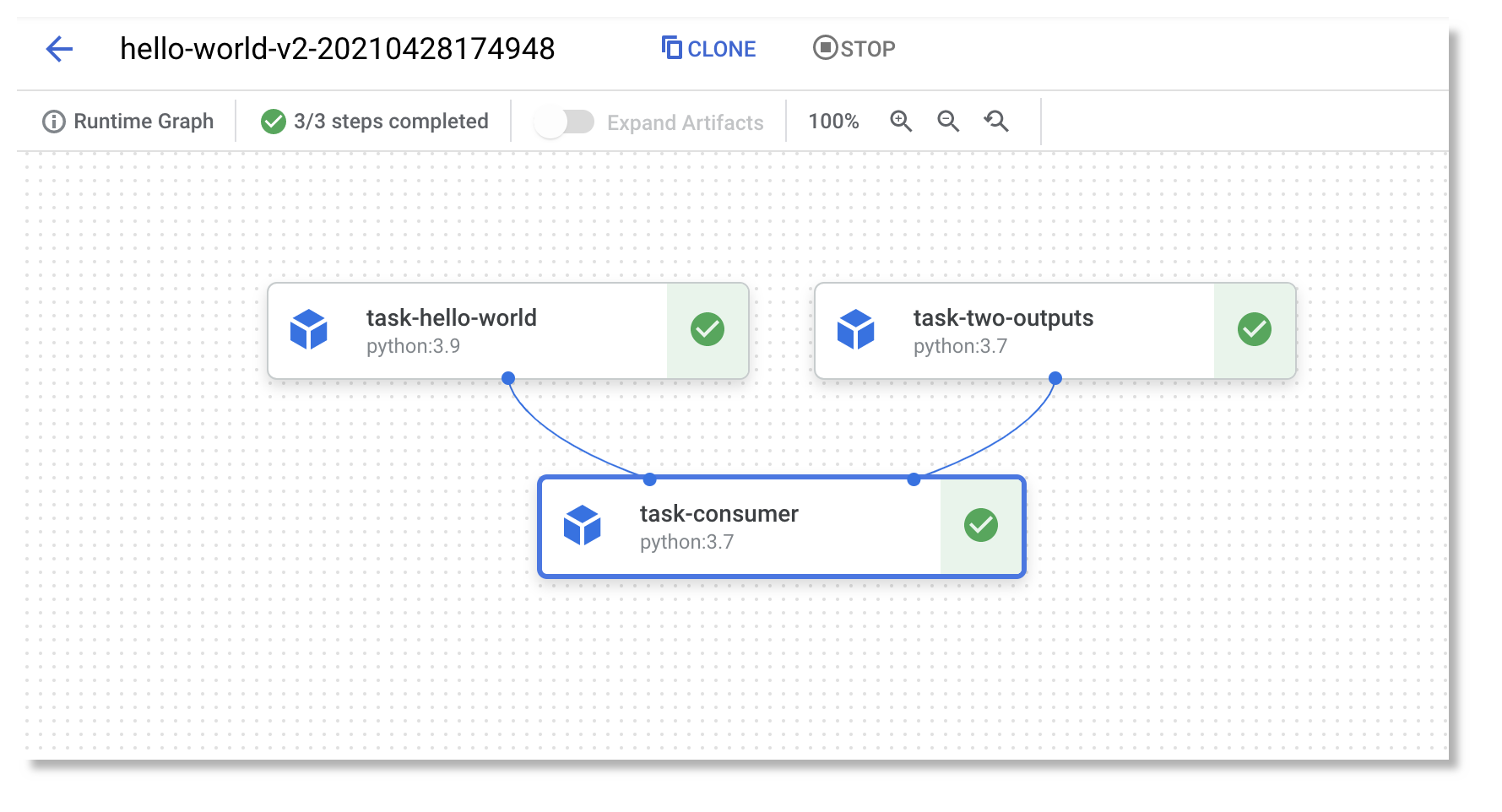

Focusing on Kubeflow Pipelines, these are constructed from individual components that can have inputs and outputs, linked together using Python code. These components can be pre-built container images or simply Python functions. Kubeflow automates the execution of Python functions within a containerized environment, eliminating the need for users to write Dockerfiles. This simplifies the development process and allows for greater flexibility in building and deploying machine learning pipelines.

Hello world component with the Kubeflow Pipelines (KFP) SDK:

```python
from kfp import compiler, dsl


# A lightweight Python component: KFP runs this function inside a container
# built from the given base image, so no Dockerfile is needed.
@dsl.component(base_image="python:3.9")
def hello_world(text: str) -> str:
    print(text)
    return text


# Compile the component into a YAML spec that Vertex AI Pipelines can execute.
compiler.Compiler().compile(hello_world, "hw.yaml")
```

After the workflow of your pipeline is defined, you can compile it into YAML format. The YAML file includes all the information needed to execute your pipeline on Vertex AI Pipelines.

Simple Vertex AI pipeline, source: by author

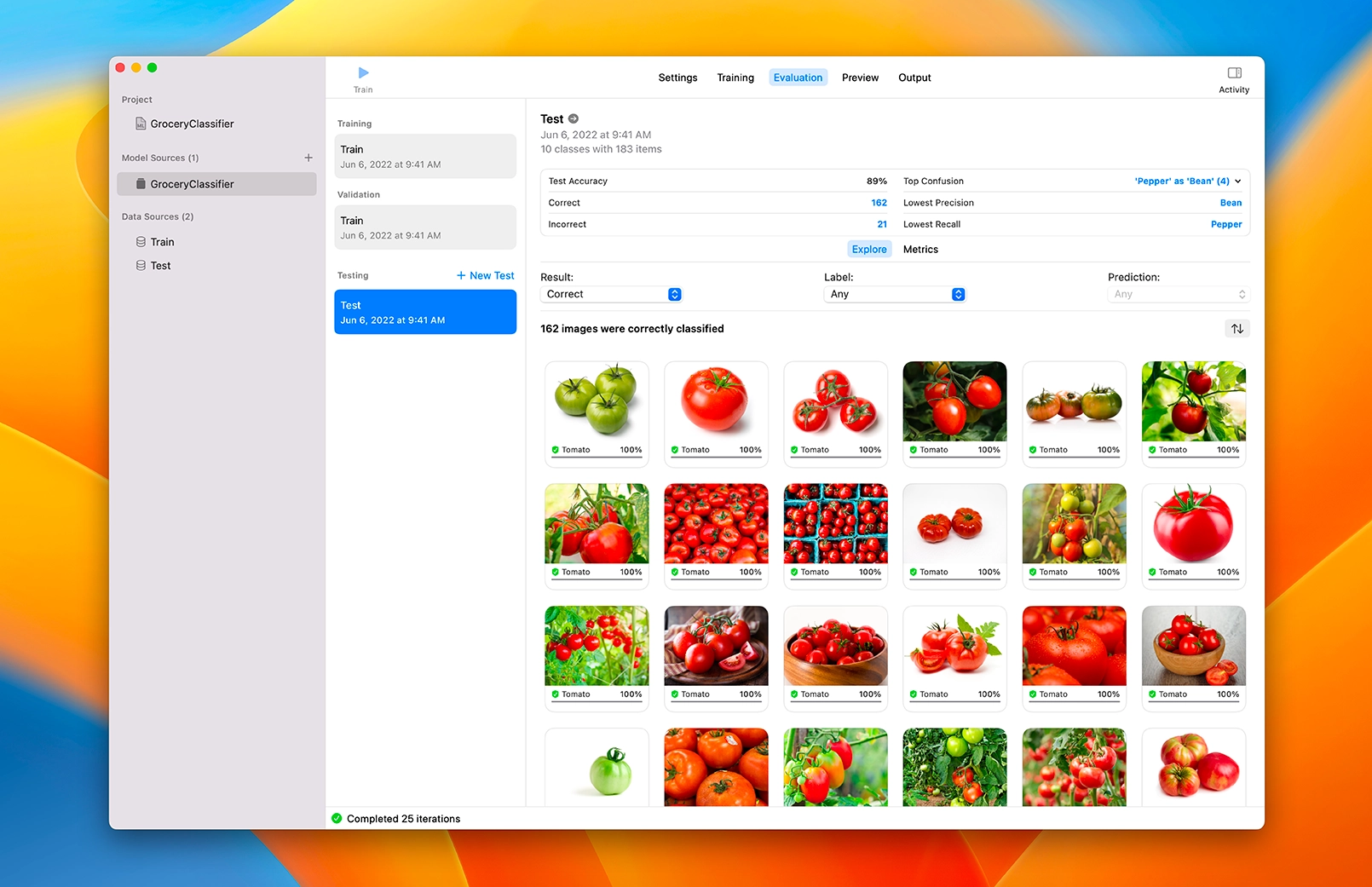

Another AI service is AutoML, GCP’s low-code offering. AutoML empowers users with limited Machine Learning expertise to train high-quality models for various data types (image, text, tabular, video, etc.). Its interface helps automate tasks like feature engineering, model selection, and hyperparameter tuning. This reduces development time and effort, making AI accessible to a broader audience.
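
At its simplest, the model selection and hyperparameter tuning that AutoML automates is a search: try candidate configurations, score each on held-out data, and keep the best. The sketch below shows that idea as a plain grid search over `k` for a tiny 1-D k-nearest-neighbors classifier. The toy data, the candidate values, and the function names are assumptions for illustration; it does not reproduce Vertex AI AutoML’s actual search strategy.

```python
from collections import Counter


def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, validation, k):
    """Share of validation points the model labels correctly."""
    hits = sum(knn_predict(train, x, k) == y for x, y in validation)
    return hits / len(validation)


def grid_search(train, validation, candidates):
    """Score every candidate k and return the best one plus all scores."""
    scores = {k: accuracy(train, validation, k) for k in candidates}
    best = max(scores, key=scores.get)  # on ties, the first candidate listed wins
    return best, scores


# Toy data: the "b" point at 2.5 is label noise inside the "a" cluster.
train = [(1.0, "a"), (2.0, "a"), (2.5, "b"), (3.0, "a"),
         (7.0, "b"), (8.0, "b"), (9.0, "b")]
validation = [(2.4, "a"), (2.6, "a"), (8.5, "b")]

best_k, scores = grid_search(train, validation, candidates=[1, 3, 5])
print(best_k, scores)
```

Here `k=1` is fooled by the noisy point, while `k=3` and `k=5` both score perfectly; the search keeps `k=3` because it is listed first. A managed service runs the same kind of loop over far larger spaces of models and settings, which is what saves development time.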

Recently, the Vertex AI Agent Builder has emerged as a powerful tool for building conversational AI applications, allowing for easy integration with Large Language Models (LLMs) and backend services via a declarative no-code approach. Agents provide features like conversation history management, context awareness, and integration with other GCP services.
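
Conversation history management boils down to keeping a bounded window of past turns and sending it with each new message, so the model keeps the context of the dialogue. The sketch below shows that idea in plain Python; the `ConversationMemory` class, the `max_turns` limit, and the role/content message shape are hypothetical and are not the Agent Builder API.

```python
from collections import deque


class ConversationMemory:
    """Keep the most recent turns so each request carries recent context."""

    def __init__(self, system_prompt, max_turns=3):
        self.system_prompt = system_prompt
        # One turn = one user message plus one assistant reply; the deque
        # silently drops the oldest turn once max_turns is exceeded.
        self.turns = deque(maxlen=max_turns)

    def add_turn(self, user_text, assistant_text):
        self.turns.append((user_text, assistant_text))

    def build_messages(self, new_user_text):
        """Assemble the message list for the next model call."""
        messages = [{"role": "system", "content": self.system_prompt}]
        for user_text, assistant_text in self.turns:
            messages.append({"role": "user", "content": user_text})
            messages.append({"role": "assistant", "content": assistant_text})
        messages.append({"role": "user", "content": new_user_text})
        return messages


memory = ConversationMemory("You are a helpful support agent.", max_turns=2)
memory.add_turn("Hi", "Hello! How can I help?")
memory.add_turn("Where is my order?", "It ships tomorrow.")
memory.add_turn("Can I change the address?", "Yes, until it ships.")
print(memory.build_messages("Thanks!"))
```

Because only the last two turns are kept, the opening greeting is no longer sent. Bounding the window keeps requests within the model’s context limit, at the cost of forgetting older details, a trade-off a managed agent platform handles for you.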

While AutoML offers a streamlined, no-code experience, Vertex AI in general provides the flexibility for custom model development and more intricate AI workflows, such as with Pipelines. Choosing the right approach depends on the project’s specific requirements, the available technical expertise, and the desired level of control over the model.

Amazon Web Services (AWS) provides SageMaker Canvas, a visual, no-code Machine Learning tool. It allows business analysts and other non-technical users to generate accurate predictions without writing any code. Users can access and combine data from various sources, automatically clean and prepare the data, and build, train, and deploy models — all through a user-friendly interface.

SageMaker Canvas tackles the entire Machine Learning workflow with a no-code approach: data access and preparation, model building, training, and deployment. It also simplifies the evaluation process with explainable AI insights into model behavior and predictions, promoting transparency and trust. While highly accessible, SageMaker Canvas might have limitations regarding customization and integration with more complex AI workflows. Its primary strength lies in empowering non-technical users to leverage Machine Learning for generating predictions and insights without needing coding skills. For more advanced AI development and deployment, AWS offers other SageMaker tools.

Microsoft Azure offers the Machine Learning Designer, a drag-and-drop interface for building and deploying Machine Learning models. This low-code environment enables users to visually create data pipelines, experiment with different algorithms, and deploy models without extensive coding. The Designer supports a wide range of algorithms and data sources, providing flexibility for various Machine Learning tasks.

Similar to other low-code/no-code platforms, Azure Machine Learning Designer simplifies the AI development process, lowering the barrier to entry for users with limited coding experience. Its visual interface promotes experimentation and collaboration, allowing teams to iterate quickly on their AI solutions.

Designer drag and drop, source: https://learn.microsoft.com/en-us/azure/machine-learning/concept-designer

Azure Machine Learning Designer offers a flexible approach to building ML pipelines, supporting both classic prebuilt components (v1) and newer custom components (v2). While these component types aren’t interchangeable within a single pipeline, custom components (v2) provide significant advantages for new projects. They enable encapsulating your own code for seamless sharing and collaboration across workspaces.

This change has increased the platform’s flexibility considerably, so it is worth a look for companies already using Azure.

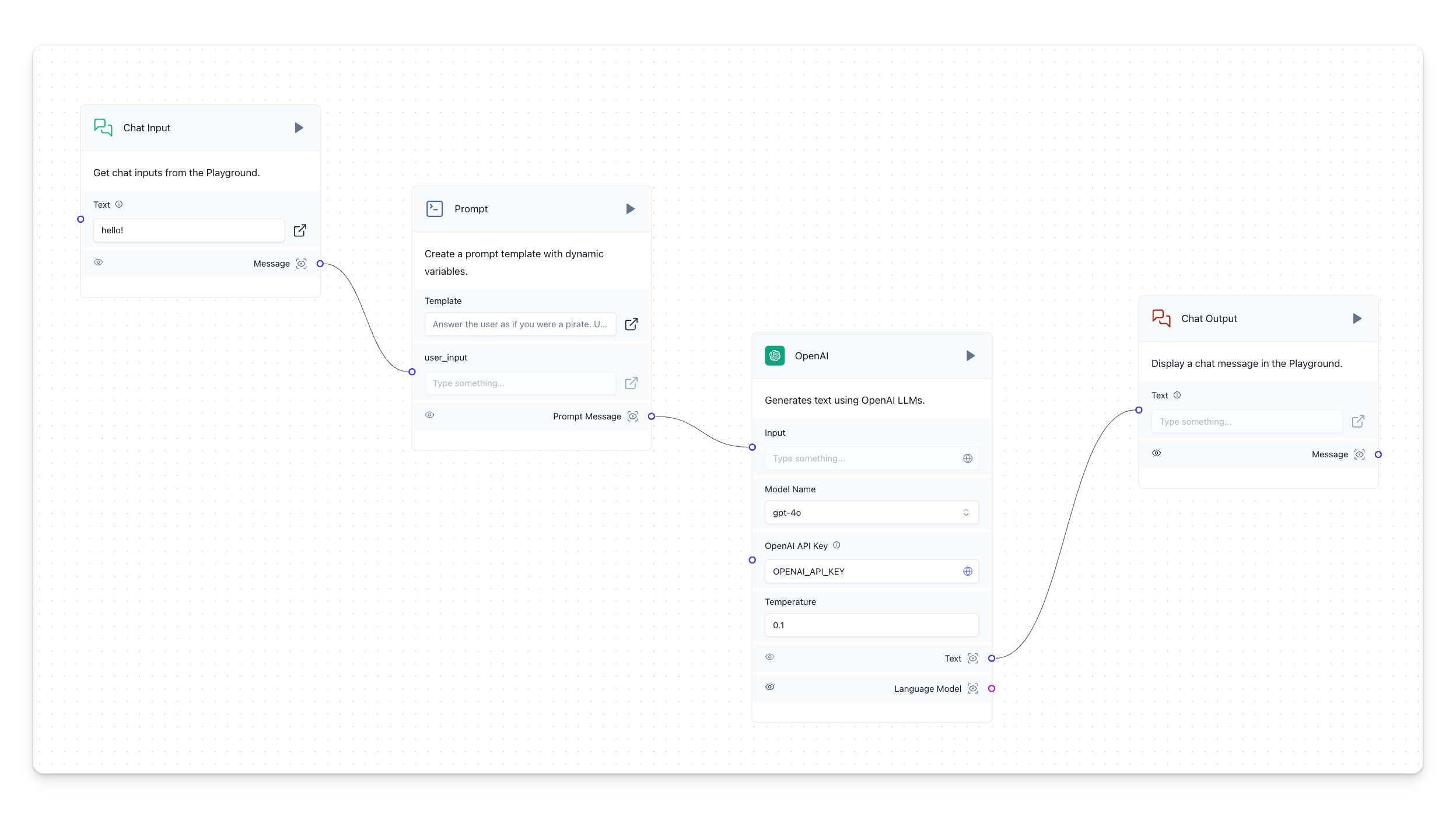

Beyond the major cloud platforms, more and more open-source and proprietary solutions are emerging to address various AI needs. These tools offer diverse functionalities and levels of abstraction. Here are a few noteworthy examples to give you some inspiration:

Create ML, source: Create ML

Basic prompting flow in LangFlow, source: by author

Another fast-evolving area of low-code AI solutions is SQL integration.

Example (Snowflake ML forecasting):

```sql
-- Train your model
CREATE SNOWFLAKE.ML.FORECAST my_model(
    INPUT_DATA => TABLE(my_view),
    TIMESTAMP_COLNAME => 'my_timestamps',
    TARGET_COLNAME => 'my_metric'
);

-- Generate forecasts using your model
SELECT * FROM TABLE(my_model!FORECAST(FORECASTING_PERIODS => 7));
```

Example (BigQuery ML with Gemini):

```sql
-- Create a remote model for Gemini 1.5 Flash
CREATE OR REPLACE MODEL `gemini_demo.gemini_1_5_flash`
  REMOTE WITH CONNECTION `us.gemini_conn`
  OPTIONS (endpoint = 'gemini-1.5-flash');

-- Use ML.GENERATE_TEXT to perform text generation
SELECT *
FROM
  ML.GENERATE_TEXT(
    MODEL `gemini_demo.gemini_1_5_flash`,
    (SELECT 'Why is Data Engineering so awesome?' AS prompt)
  );
```

OpenAI offers a suite of tools that cater to a range of AI development needs. For low-code development, custom GPTs provide a user-friendly way to build tailored versions of ChatGPT for specific tasks without writing code. While custom GPTs simplify interaction with the model, OpenAI also provides APIs and tools for more advanced development and customization. This combination of low-code options and robust APIs makes OpenAI’s platform suitable for a diverse user base, from those seeking quick solutions to developers building complex AI applications.

These tools, along with frameworks like LangChain for building LLM-powered applications, represent the rapid evolution of the low-code AI landscape. They also empower smaller data teams to create sophisticated AI solutions.

However, there are things to keep in mind, no matter which tool you choose:

Speaking of the “why”: feel free to read my article about Solving a Data Engineering task with pragmatism and asking WHY? 😉.

When Wayfair, one of the world’s largest online destinations for home furnishings, needed to improve their Machine Learning capabilities, they faced a familiar challenge: plenty of data but complex AI infrastructure needs. Using Google Cloud’s Vertex AI, they achieved remarkable improvements in their ML operations, running large model training jobs 5-10x faster than before.

We’re doing ML at a massive scale, and we want to make that easy. That means accelerating time-to-value for new models, increasing reliability and speed of very large regular re-training jobs, and reducing the friction to build and deploy models at scale

explains Matt Ferrari, Head of Ad Tech, Customer Intelligence, and Machine Learning at Wayfair.

This success story demonstrates how the right tools can dramatically improve AI implementation, even with existing data. It’s not about having more data—it’s about having the right expertise and tools to effectively use what you have.

The AI bottleneck is not data, it’s people. The question shouldn’t be “Do I have enough data to use AI?” but rather “Do I have the necessary expertise to use AI effectively?” While data is a fundamental component, human capital is the key to unlocking AI’s full potential.

tl;dr:

By acknowledging and addressing the human expertise bottleneck, businesses can more effectively use AI, driving innovation and achieving meaningful outcomes.

Big thanks to Yaakov Bressler for the inspiration!